贝特雷金

自我介绍

我在信息技术领域工作超 10 年的时间里,在不同领域积累了丰富的技能和经验。

虚拟化和云计算:

- 熟练使用 VMware 技术,包括 vCenter、HA(高可用性)、vSAN(分布式存储)、虚拟机备份等。

- 在公有云环境中,有丰富的使用经验,如:Aliyun、QingCloud、 AWS or GCP。

服务器和网络管理:

- 熟练使用各 Linux 发行版 Os,以及常用服务器应用如 DHCP、DNS、NFS、SMB 和 iSCSI、NTP/Chronyd。

- Centos/Redhat/Rocklinux

- Ubantu/Linux Mint

- 网络配置和故障排除经验,确保服务器间、应用程序的连通性。

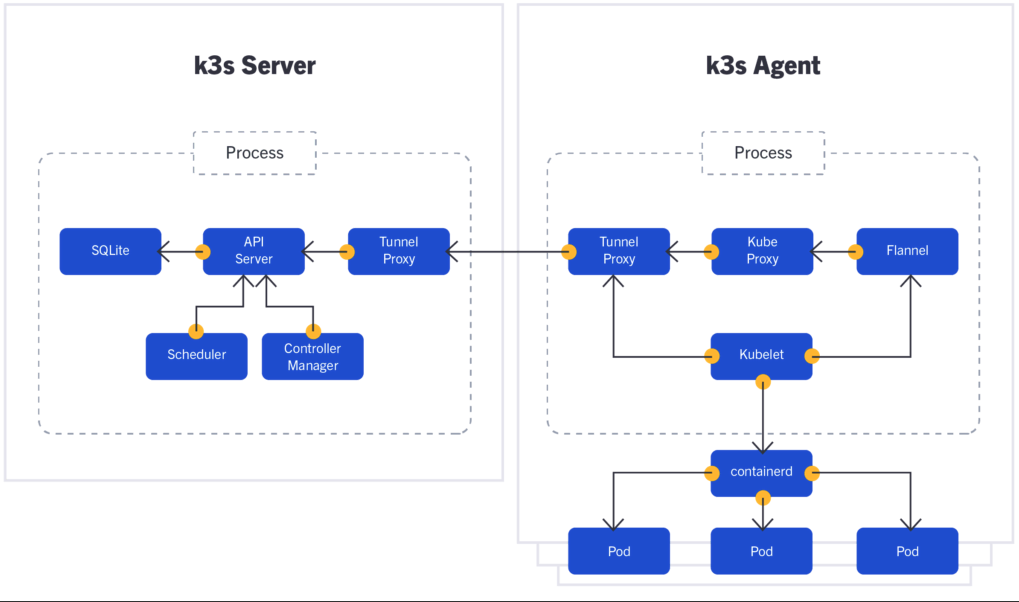

容器化和编排:

- 熟练使用 Kubernetes 和 Cri,能够协助容器化改造、部署、维护。

- docker、podman、containerd、jenkins

- 熟练使用 Helm 等工具来简化 Kubernetes 应用部署更新。

监控和性能优化:

- 熟练使用 Prometheus 和 Grafana 进行监控和性能分析,及通过 Alermanger 及时告警通知,来确保系统的稳定和性能。

- 通过日志分析和指标监控实施自动化故障检测和预防措施。

- 轻度使用 efk、loki、skywallking

持续集成和持续交付(CI/CD):

- 在 Jenkins 和 ArgoCD 中拥有经验,以构建自动化的应用发布流程。

- 整合 GitLab 作为代码管理和协作工具,促进开发团队的协作。

总结而言,我是一名经验丰富的 IT 运维专业人员,擅长虚拟化、云计算、容器化、监控和自动化等多个领域。我的目标是确保系统的高可用性、性能和安全性,并通过持续改进和自动化流程来提高效率。我更热爱学习和不断发展,始终追求在不断变化的 IT 领域中保持竞争力。

使用 GitHub Pages 快速发布

建站 参考链接

Git 安装、初始化

- Mac 中 Git 安装与 GitHub 基本使用

- 生成新的 SSH-key 并且添加到 ssh-agent

- 每年需要更新下 token,在个人用户 setting 中 dev开发这里面创建 token,并设置某个 repo 的访问权限,然后 git clone 时使用 https,git push 提交时提示输入用户密码/密码就是我们创建的 token

记 Kubernetes 1.28.1 之 Kubeadm 安装过程 - 单 master 集群

节点初始化配置

-

更改主机名配置

hostshostnamectl set-hostname --static k8s-master hostnamectl set-hostname --static k8s-worker01 echo '10.2x.2x9.6x k8s-master' >> /etc/hosts echo '10.2x.2x9.6x k8s-worker01' >> /etc/hosts -

禁用

firewalld、selinux、swapsystemctl stop firewalld && systemctl disable firewalld sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config && setenforce 0 && getenforce swapoff -a && sed -i 's@/dev/mapper/centos-swap@#/dev/mapper/centos-swap@g' /etc/fstab -

系统优化

- 加载模块 cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 EOF modprobe -- ip_vs modprobe -- ip_vs_rr modprobe -- ip_vs_wrr modprobe -- ip_vs_sh modprobe -- nf_conntrack_ipv4 modprobe -- overlay modprobe -- br_netfilter - 检查是否生效 lsmod | grep ip_vs && lsmod | grep nf_conntrack_ipv4 - 配置 ipv4 转发内核参数 cat > /etc/sysctl.d/k8s.conf << EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 vm.swappiness = 0 EOF sysctl -p && sysctl --system - 检查内核参数是否生效 sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward -

其余配置

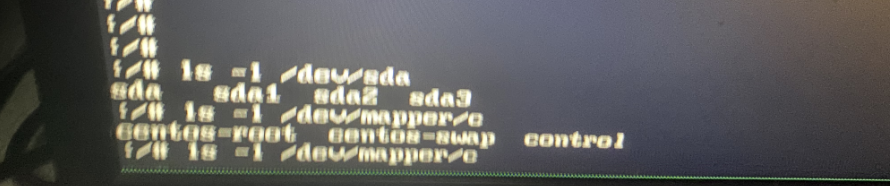

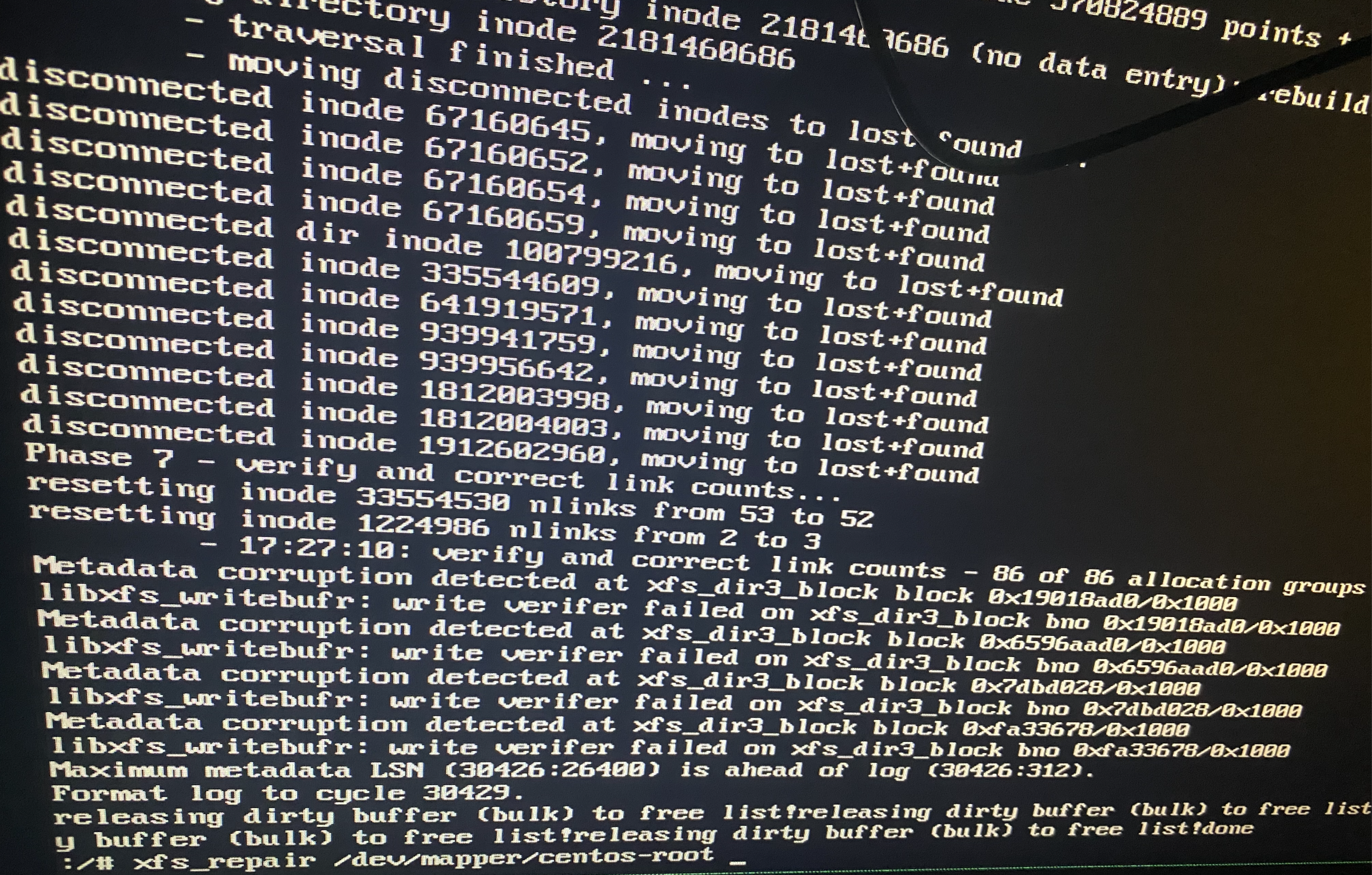

- 根分区扩容 # 可选步骤 lsblk pvcreate /dev/sdb vgextend centos /dev/sdb lvextend -L +99G /dev/mapper/centos-root xfs_growfs /dev/mapper/centos-root - 配置阿里源 wget -O /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo - 安装常用工具 yum install -y ipvsadm ipset sysstat conntrack libseccomp wget git net-tools bash-completion

安装必要组件

-

-

cgroup

-

cgroupfs 驱动:是 kubelet 中默认的 cgroup 驱动。 当使用 cgroupfs 驱动时, kubelet 和容器运行时将直接对接 cgroup 文件系统来配置 cgroup

-

systemd 驱动:某个 Linux 系统发行版使用 systemd 作为其初始化系统时,初始化进程会生成并使用一个 root 控制组(cgroup),并充当 cgroup 管理器

-

同时存在两个 cgroup 管理器将造成系统中针对可用的资源和使用中的资源出现两个视图。某些情况下, 将 kubelet 和容器运行时配置为使用

cgroupfs、但为剩余的进程使用systemd的那些节点将在资源压力增大时变得不稳定,所以我们要保证 kubelet 和 docker 的驱动跟系统保持一致,均为 systemd

-

-

安装 containerd

- 解压并将二进制文件放入 /usr/local/ 目录下 tar Cxzvf /usr/local containerd-1.7.5-linux-amd64.tar.gz bin/ bin/containerd-shim-runc-v2 bin/containerd-shim bin/ctr bin/containerd-shim-runc-v1 bin/containerd bin/containerd-stress - 配置systemd # 默认会生成 vi /usr/lib/systemd/system/containerd.service [Unit] Description=containerd container runtime Documentation=https://containerd.io After=network.target local-fs.target [Service] #uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration #Environment="ENABLE_CRI_SANDBOXES=sandboxed" ExecStartPre=-/sbin/modprobe overlay ExecStart=/usr/local/bin/containerd Type=notify Delegate=yes KillMode=process Restart=always RestartSec=5 # Having non-zero Limit*s causes performance problems due to accounting overhead # in the kernel. We recommend using cgroups to do container-local accounting. LimitNPROC=infinity LimitCORE=infinity LimitNOFILE=infinity # Comment TasksMax if your systemd version does not supports it. # Only systemd 226 and above support this version. TasksMax=infinity OOMScoreAdjust=-999 [Install] WantedBy=multi-user.target - 生成默认配置文件 mkdir -p /etc/containerd/ containerd config default >> /etc/containerd/config.toml - cgroup 驱动更改为 systemd vi /etc/containerd/config.toml [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] ... [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] SystemdCgroup = true - 修改 containerd 默认的 pause 镜像 # 默认为境外镜像由于网络问题需要更改为国内源 vi /etc/containerd/config.toml [plugins."io.containerd.grpc.v1.cri"] sandbox_image = "k8s.m.daocloud.io/pause:3.9" # 更改为 k8s.m.daocloud.io,默认为 registry.k8s.io - 重启 containerd systemctl daemon-reload && systemctl restart containerd- 安装 runc

install -m 755 runc.amd64 /usr/local/sbin/runc- 安装 cni - 建议不执行、安装 kubelet 时会自动安装(使用最新的 cni,可能会出现兼容性问题)

mkdir -p /opt/cni/bin tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz ./ ./macvlan ./static ./vlan ./portmap ./host-local ./vrf ./bridge ./tuning ./firewall ./host-device ./sbr ./loopback ./dhcp ./ptp ./ipvlan ./bandwidth -

-

安装 kubeadm、kubelet、kubectl kubelet 配置文件

- 配置 kubernests 源并安装

[root@k8s-master yum.repos.d]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/ enabled=1 gpgcheck=1 repo_gpgcheck=1 gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg EOF - 查看对应组件版本并指定安装版本 # 可选 [root@k8s-master yum.repos.d]# yum list kubeadm --showduplicates [root@k8s-master yum.repos.d]# yum list kubectl --showduplicates [root@k8s-master yum.repos.d]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y - 安装 kubeadm、kubectl 组件 [root@k8s-master yum.repos.d]# yum install --setopt=obsoletes=0 kubeadm-1.28.2-0 kubelet-1.28.2-0 kubectl-1.28.2-0 -y Loaded plugins: fastestmirror Loading mirror speeds from cached hostfile * base: mirrors.ustc.edu.cn * extras: mirrors.ustc.edu.cn * updates: ftp.sjtu.edu.cn base | 3.6 kB 00:00:00 extras | 2.9 kB 00:00:00 kubernetes | 1.4 kB 00:00:00 updates | 2.9 kB 00:00:00 (1/5): base/7/x86_64/group_gz | 153 kB 00:00:00 (2/5): extras/7/x86_64/primary_db | 250 kB 00:00:00 (3/5): kubernetes/primary | 136 kB 00:00:00 (4/5): updates/7/x86_64/primary_db | 22 MB 00:00:02 (5/5): base/7/x86_64/primary_db | 6.1 MB 00:00:13 kubernetes 1010/1010 Resolving Dependencies --> Running transaction check ---> Package kubeadm.x86_64 0:1.28.1-0 will be installed --> Processing Dependency: kubernetes-cni >= 0.8.6 for package: kubeadm-1.28.1-0.x86_64 --> Processing Dependency: cri-tools >= 1.19.0 for package: kubeadm-1.28.1-0.x86_64 ---> Package kubectl.x86_64 0:1.28.1-0 will be installed ---> Package kubelet.x86_64 0:1.28.1-0 will be installed --> Processing Dependency: socat for package: kubelet-1.28.1-0.x86_64 --> Processing Dependency: conntrack for package: kubelet-1.28.1-0.x86_64 --> Running transaction check ---> Package conntrack-tools.x86_64 0:1.4.4-7.el7 will be installed --> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 --> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 --> Processing Dependency: libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 --> Processing Dependency: libnetfilter_queue.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 --> Processing Dependency: libnetfilter_cttimeout.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 --> Processing Dependency: libnetfilter_cthelper.so.0()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64 ---> Package cri-tools.x86_64 0:1.26.0-0 will be installed ---> Package kubernetes-cni.x86_64 0:1.2.0-0 will be installed ---> Package socat.x86_64 0:1.7.3.2-2.el7 will be installed --> Running transaction check ---> Package libnetfilter_cthelper.x86_64 0:1.0.0-11.el7 will be installed ---> Package libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7 will be installed ---> Package libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 will be installed --> Finished Dependency Resolution Dependencies Resolved ================================================================================================================================================================ Package Arch Version Repository Size ================================================================================================================================================================ Installing: kubeadm x86_64 1.28.1-0 kubernetes 11 M kubectl x86_64 1.28.1-0 kubernetes 11 M kubelet x86_64 1.28.1-0 kubernetes 21 M Installing for dependencies: conntrack-tools x86_64 1.4.4-7.el7 base 187 k cri-tools x86_64 1.26.0-0 kubernetes 8.6 M kubernetes-cni x86_64 1.2.0-0 kubernetes 17 M # cni 会安装 /opt/cni/bin/ 网络插件,也就是当前 k8s 版本所兼容的 libnetfilter_cthelper x86_64 1.0.0-11.el7 base 18 k libnetfilter_cttimeout x86_64 1.0.0-7.el7 base 18 k libnetfilter_queue x86_64 1.0.2-2.el7_2 base 23 k socat x86_64 1.7.3.2-2.el7 base 290 k Transaction Summary ================================================================================================================================================================ Install 3 Packages (+7 Dependent packages) Total download size: 69 M Installed size: 292 M Is this ok [y/d/N]: y # y 进行安装即可⚠️:由于官网未开放同步方式, 可能会有 gpg 检查失败的情况, 请用

yum install -y --nogpgcheck kubelet kubeadm kubectl安装来规避 gpg-key 的检查- 启动 kubelet

- 启动 kubelet 服务 [root@k8s-master ~]# systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service. ● kubelet.service - kubelet: The Kubernetes Node Agent Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled) Drop-In: /usr/lib/systemd/system/kubelet.service.d └─10-kubeadm.conf Active: active (running) since Thu 2023-08-31 16:00:25 CST; 11ms ago Docs: https://kubernetes.io/docs/ Main PID: 3011 (kubelet) CGroup: /system.slice/kubelet.service └─3011 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/...

初始化集群配置

-

- 拉取必要镜像 Daocloud-镜像源

- 确认现有版本 kubeadm、kubelet 所需要的镜像版本 [root@k8s-master ~]# kubeadm config images list registry.k8s.io/kube-apiserver:v1.28.1 registry.k8s.io/kube-controller-manager:v1.28.1 registry.k8s.io/kube-scheduler:v1.28.1 registry.k8s.io/kube-proxy:v1.28.1 registry.k8s.io/pause:3.9 registry.k8s.io/etcd:3.5.9-0 registry.k8s.io/coredns/coredns:v1.10.1 - 拉取指定的镜像版本(Daocloud源) [root@k8s-master ~]# kubeadm config images pull --image-repository k8s.m.daocloud.io --kubernetes-version v1.28.1 [config/images] Pulled k8s.m.daocloud.io/kube-apiserver:v1.28.1 [config/images] Pulled k8s.m.daocloud.io/kube-controller-manager:v1.28.1 [config/images] Pulled k8s.m.daocloud.io/kube-scheduler:v1.28.1 [config/images] Pulled k8s.m.daocloud.io/kube-proxy:v1.28.1 [config/images] Pulled k8s.m.daocloud.io/pause:3.9 [config/images] Pulled k8s.m.daocloud.io/etcd:3.5.9-0 [config/images] Pulled k8s.m.daocloud.io/coredns:v1.10.1 - 拉取指定镜像版本() [root@k8s-master ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.28.1 [config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.1 [config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.1 [config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.1 [config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.28.1 [config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9 [config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.9-0 [config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.10.1 -

生成初始化集群配置文件 kubeadm init kubelet

- 打印一个默认的集群配置文件 [root@k8s-master ~]# kubeadm config print init-defaults - 打印一个默认的集群配置文件 - 关于 kubelet 默认配置 kubeadm config print init-defaults --component-configs KubeletConfiguration# clusterConfigfile apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s usages: - signing - authentication kind: InitConfiguration localAPIEndpoint: advertiseAddress: 10.2x.20x.6x # 更改为节点 ip bindPort: 6443 nodeRegistration: criSocket: unix:///var/run/containerd/containerd.sock imagePullPolicy: IfNotPresent name: k8s-master # 更改为节点主机名 taints: null --- apiServer: timeoutForControlPlane: 4m0s # kubeadm install 集群时的超市时间 apiVersion: kubeadm.k8s.io/v1beta3 certificatesDir: /etc/kubernetes/pki clusterName: kubernetes controllerManager: {} dns: {} etcd: local: dataDir: /var/lib/etcd imageRepository: k8s.m.daocloud.io # 更改为 k8s.m.daocloud.io,默认 registry.k8s.io kind: ClusterConfiguration kubernetesVersion: 1.28.1 # 修改 k8s 版本 networking: dnsDomain: cluster.local podSubnet: 172.16.15.0/22 # 集群的 pod ip 段,冲突的话需要更改 serviceSubnet: 10.96.0.0/12 # 集群的 service ip 段,冲突的话需要更改 scheduler: {} --- kind: KubeletConfiguration apiVersion: kubelet.config.k8s.io/v1beta1 cgroupDriver: systemd # 与系统和 containerd 使用一致的 cgroup 驱动

部署集群

-

使用

mawb-ClusterConfig.yaml安装集群[root@k8s-master ~]# kubeadm init --config mawb-ClusterConfig.yaml [init] Using Kubernetes version: v1.28.1 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W0831 17:52:06.298929 9686 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "k8s.m.daocloud.io/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.2x.2x9.6x] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [10.2x.2x9.6x 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [10.2x.2x9.6x 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" [control-plane] Creating static Pod manifest for "kube-scheduler" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [kubelet-check] Initial timeout of 40s passed. [apiclient] All control plane components are healthy after 10.507981 seconds I0831 20:19:17.452642 9052 uploadconfig.go:112] [upload-config] Uploading the kubeadm ClusterConfiguration to a ConfigMap [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace I0831 20:19:17.498585 9052 uploadconfig.go:126] [upload-config] Uploading the kubelet component config to a ConfigMap [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster I0831 20:19:17.536230 9052 uploadconfig.go:131] [upload-config] Preserving the CRISocket information for the control-plane node I0831 20:19:17.536386 9052 patchnode.go:31] [patchnode] Uploading the CRI Socket information "unix:///var/run/containerd/containerd.sock" to the Node API object "k8s-master" as an annotation [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] [bootstrap-token] Using token: abcdef.0123456789abcdef [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace I0831 20:19:19.159977 9052 clusterinfo.go:47] [bootstrap-token] loading admin kubeconfig I0831 20:19:19.160881 9052 clusterinfo.go:58] [bootstrap-token] copying the cluster from admin.conf to the bootstrap kubeconfig I0831 20:19:19.161567 9052 clusterinfo.go:70] [bootstrap-token] creating/updating ConfigMap in kube-public namespace I0831 20:19:19.182519 9052 clusterinfo.go:84] creating the RBAC rules for exposing the cluster-info ConfigMap in the kube-public namespace I0831 20:19:19.209727 9052 kubeletfinalize.go:90] [kubelet-finalize] Assuming that kubelet client certificate rotation is enabled: found "/var/lib/kubelet/pki/kubelet-client-current.pem" [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key I0831 20:19:19.215469 9052 kubeletfinalize.go:134] [kubelet-finalize] Restarting the kubelet to enable client certificate rotation [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: #当前用户执行,使 kubectl 可以访问/管理集群 mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.2x.20x.6x:6443 --token abcdef.0123456789abcdef \ --discovery-token-ca-cert-hash sha256:3c96533e9c86dcb7fc4b1998716bff804685ef6d40a6635e3357cb92eb4645ed -

配置 kubectl client 使其可以访问、管理集群

To start using your cluster, you need to run the following as a regular user: #当前用户执行,使 kubectl 可以访问/管理集群 mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf

接入 worker 节点

-

cri 安装请参考上面步骤

-

kubelet 安装请参考上面步骤

-

节点接入

[root@k8s-worker01 ~]# kubeadm join 10.2x.20x.6x:6443 --token abcdef.0123456789abcdef \ > --discovery-token-ca-cert-hash sha256:3c96533e9c86dcb7fc4b1998716bff804685ef6d40a6635e3357cb92eb4645ed [preflight] Running pre-flight checks [preflight] Reading configuration from the cluster... [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml' [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Starting the kubelet [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap... This node has joined the cluster: * Certificate signing request was sent to apiserver and a response was received. * The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

安装网络插件calico calicoctl

-

⚠️:修改

custom-resources.yamlcidr: 172.16.15.0/22 跟cluster podsubnet一致- 安装 crd kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/tigera-operator.yaml - 修改 image 地址 kubectl edit deployment -n tigera-operator tigera-operator quay.m.daocloud.io/tigera/operator:v1.30.4 - 节点中也要确保可以 pull pause 镜像 ctr image pull k8s.m.daocloud.io/pause:3.9 - 安装 calico kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/custom-resources.yaml installation.operator.tigera.io/default created apiserver.operator.tigera.io/default created - 检查 calico 组件状态 [root@k8s-master ~]# kubectl get pod -A NAMESPACE NAME READY STATUS RESTARTS AGE calico-apiserver calico-apiserver-9bc7d894-5l6m7 1/1 Running 0 2m28s calico-apiserver calico-apiserver-9bc7d894-v7jjm 1/1 Running 0 2m28s calico-system calico-kube-controllers-f44dcdd85-kgfwn 1/1 Running 0 10m calico-system calico-node-655zj 1/1 Running 0 10m calico-system calico-node-8qplv 1/1 Running 0 10m calico-system calico-typha-dd7d8479d-xgb7v 1/1 Running 0 10m calico-system csi-node-driver-cv5sx 2/2 Running 0 10m calico-system csi-node-driver-pd2v7 2/2 Running 0 10m kube-system coredns-56bd89c8d6-d4sgh 1/1 Running 0 15h kube-system coredns-56bd89c8d6-qjfm6 1/1 Running 0 15h kube-system etcd-k8s-master 1/1 Running 0 15h kube-system kube-apiserver-k8s-master 1/1 Running 0 15h kube-system kube-controller-manager-k8s-master 1/1 Running 0 15h kube-system kube-proxy-nqx46 1/1 Running 0 68m kube-system kube-proxy-q6m9r 1/1 Running 0 15h kube-system kube-scheduler-k8s-master 1/1 Running 0 15h tigera-operator tigera-operator-56d54674b6-lbzzf 1/1 Running 1 (30m ago) 36m - 安装 calicoctl as a kubectl plugin on a single host [root@k8s-master01 ~]# curl -L https://github.com/projectcalico/calico/releases/download/v3.26.4/calicoctl-linux-amd64 -o kubectl-calico [root@k8s-master01 ~]# chmod +x kubectl-calico [root@k8s-master01 ~]# kubectl calico -h [root@k8s-master01 ~]# mv kubectl-calico /usr/local/bin/ [root@k8s-master01 ~]# kubectl calico -h Usage: kubectl-calico [options] <command> [<args>...] create Create a resource by file, directory or stdin. replace Replace a resource by file, directory or stdin. apply Apply a resource by file, directory or stdin. This creates a resource if it does not exist, and replaces a resource if it does exists. patch Patch a pre-exisiting resource in place. delete Delete a resource identified by file, directory, stdin or resource type and name. get Get a resource identified by file, directory, stdin or resource type and name. label Add or update labels of resources. convert Convert config files between different API versions. ipam IP address management. node Calico node management. version Display the version of this binary. datastore Calico datastore management. Options: -h --help Show this screen. -l --log-level=<level> Set the log level (one of panic, fatal, error, warn, info, debug) [default: panic] --context=<context> The name of the kubeconfig context to use. --allow-version-mismatch Allow client and cluster versions mismatch. Description: The calico kubectl plugin is used to manage Calico network and security policy, to view and manage endpoint configuration, and to manage a Calico node instance. See 'kubectl-calico <command> --help' to read about a specific subcommand. - 安装 helm 组件 [root@k8s-master01 ~]# twget https://get.helm.sh/helm-v3.13.3-linux-amd64.tar.gz [root@k8s-master01 ~]# tar -zxvf helm-v3.13.3-linux-amd64.tar.gz linux-amd64/ linux-amd64/LICENSE linux-amd64/README.md linux-amd64/helm [root@k8s-master01 ~]# mv linux-amd64/helm /usr/local/bin/helmmv linux-amd64/helm /usr/local/bin/helm^C [root@k8s-master01 ~]# mv linux-amd64/helm /usr/local/bin/helm

client 工具使用与优化

-

containerd 自带

ctr cli工具- containerd 运行时工具 ctr ctr -n k8s.io images export hangzhou_pause:3.4.1.tar.gz registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1 ctr -n k8s.io images import hangzhou_pause:3.4.1.tar.gz ctr -n k8s.io images list -

k8s 社区维护的

crictl工具- crictl 工具 vi /etc/crictl.yaml #请根据实际情况进行更改 runtime-endpoint: unix:///var/run/containerd/containerd.sock image-endpoint: unix:///var/run/containerd/containerd.sock timeout: 10 -

nerdctl 安装

- nerdctl 工具 [root@k8s-master01 ~]# wget https://github.com/containerd/nerdctl/releases/download/v1.7.2/nerdctl-1.7.2-linux-amd64.tar.gz [root@k8s-master01 ~]# tar Cxzvvf /usr/local/bin nerdctl-1.7.2-linux-amd64.tar.gz -rwxr-xr-x root/root 24838144 2023-12-12 19:00 nerdctl -rwxr-xr-x root/root 21618 2023-12-12 18:59 containerd-rootless-setuptool.sh -rwxr-xr-x root/root 7187 2023-12-12 18:59 containerd-rootless.sh - nerdctl 报错 [root@k8s-master01 pig-register]# nerdctl build -t 10.10.1.75:5000:/pig/pig-registry:latest . ERRO[0000] `buildctl` needs to be installed and `buildkitd` needs to be running, see https://github.com/moby/buildkit error="failed to ping to host unix:///run/buildkit-default/buildkitd.sock: exec: \"buildctl\": executable file not found in $PATH\nfailed to ping to host unix:///run/buildkit/buildkitd.sock: exec: \"buildctl\": executable file not found in $PATH" FATA[0000] no buildkit host is available, tried 2 candidates: failed to ping to host unix:///run/buildkit-default/buildkitd.sock: exec: "buildctl": executable file not found in $PATH failed to ping to host unix:///run/buildkit/buildkitd.sock: exec: "buildctl": executable file not found in $PATH - 安装 buildkit(支持 runc 和 containerd 及 非 root 运行) [root@k8s-master01 ~]# wget https://github.com/moby/buildkit/releases/download/v0.12.4/buildkit-v0.12.4.linux-amd64.tar.gz [root@k8s-master01 ~]# tar Cxzvvf /usr/local/ buildkit-v0.12.4.linux-amd64.tar.gz [root@k8s-master01 ~]# vi /usr/lib/systemd/system/buildkit.service [Unit] Description=BuildKit Requires=buildkit.socket After=buildkit.socket Documentation=https://github.com/moby/buildkit [Service] Type=notify ExecStart=/usr/local/bin/buildkitd --addr fd:// [Install] WantedBy=multi-user.target [root@k8s-master01 ~]# vi /usr/lib/systemd/system/buildkit.socket [Unit] Description=BuildKit Documentation=https://github.com/moby/buildkit [Socket] ListenStream=%t/buildkit/buildkitd.sock SocketMode=0660 [Install] WantedBy=sockets.target [root@k8s-master01 ~]# systemctl daemon-reload && systemctl restart buildkitd.service - 构建镜像 [root@k8s-master01 pig-register]# nerdctl build -t pig-registry:latest -f Dockerfile . [+] Building 14.9s (4/7) => [internal] load build definition from Dockerfile 0.0s => => transferring dockerfile: 384B 0.0s => [internal] load metadata for docker.io/alibabadragonwell/dragonwell:17-anolis 2.8s => [internal] load .dockerignore 0.0s => => transferring context: 2B 0.0s => [1/3] FROM docker.io/alibabadragonwell/dragonwell:17-anolis@sha256:2d31fb3915436ed9f15b4cda936d233419a45a8e35c696d324d6ceadab3d30cc 12.1s => => resolve docker.io/alibabadragonwell/dragonwell:17-anolis@sha256:2d31fb3915436ed9f15b4cda936d233419a45a8e35c696d324d6ceadab3d30cc 0.0s => => sha256:4f4c4e7d1e14aae42ec5ae94c49838c5be9130315ae3e41d56a8670383c0a727 18.39MB / 18.39MB 4.9s => => sha256:1eb01ecdf1afb7abdb39b396b27330b94d3f6b571d766986e2d91916fedad4d1 126B / 126B 1.0s => => sha256:54273d8675f329a1fbcaa73525f4338987bd8e81ba06b9ba72ed9ca63246c834 58.72MB / 82.82MB 12.0s => => sha256:2c5d3d4cbdcb00ce7c1aec91f65faeec72e3676dcc042131f7ec744c371ada32 26.21MB / 193.44MB 12.0s => [internal] load build context 1.1s => => transferring context: 160.19MB 1.1s -

kubectl 自动补全

# 节点需要安装 bash-completion、节点初始化配置已包含 source <(kubectl completion bash) echo "source <(kubectl completion bash)" >> ~/.bashrc

附录

-

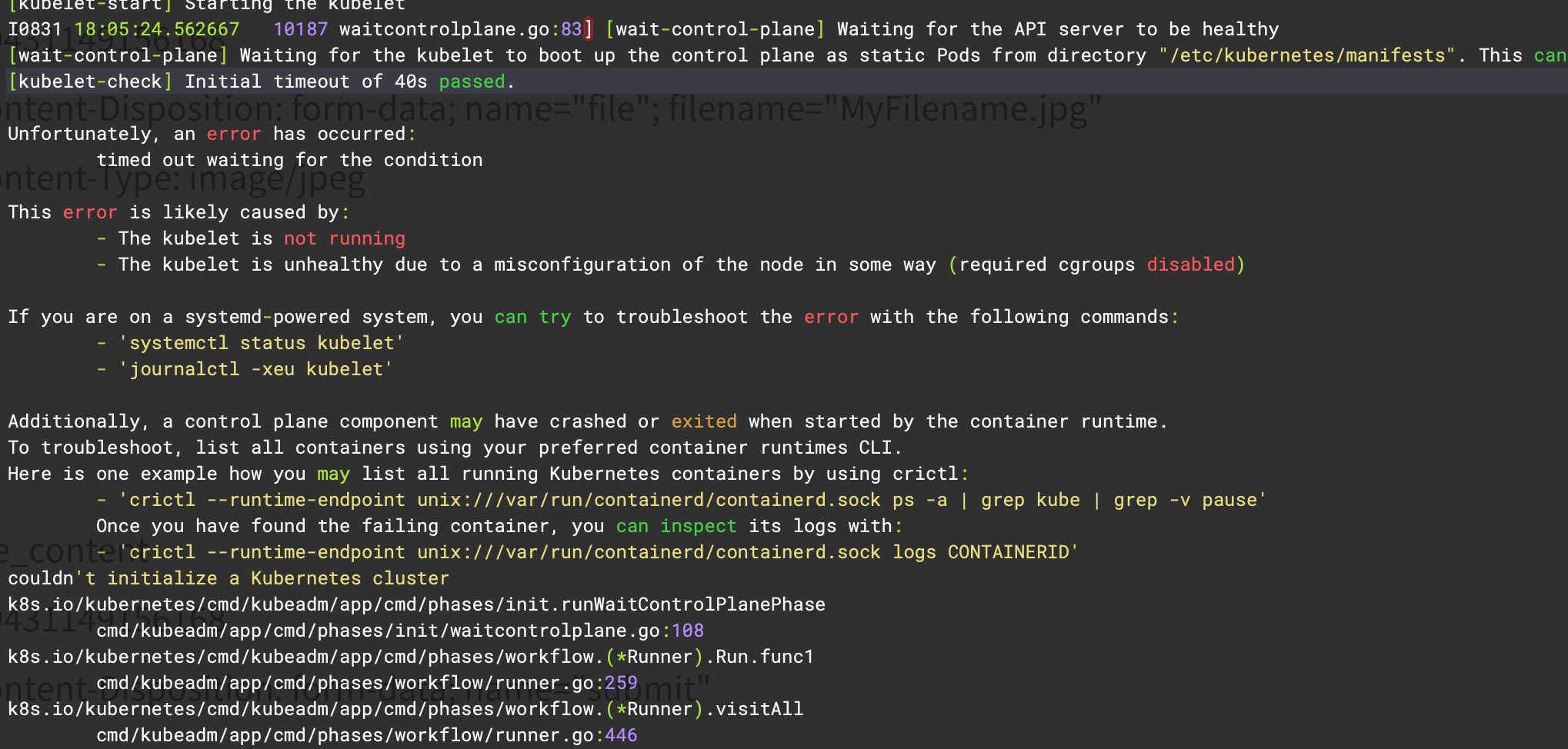

kubeadm init 过程中报错

Unfortunately, an error has occurred: timed out waiting for the condition

-

现象

- 🈚️任何 pod 创建,kubelet、containerd 没有任何日志且服务运行正常

- 以为是超时导致,更改了 init 时使用的集群配置文件

timeoutForControlPlane: 10m0s无效

-

解决

- 重启节点后,init 集群成功 # 怀疑时 selinux 配置导致的

-

Ingress-nginx

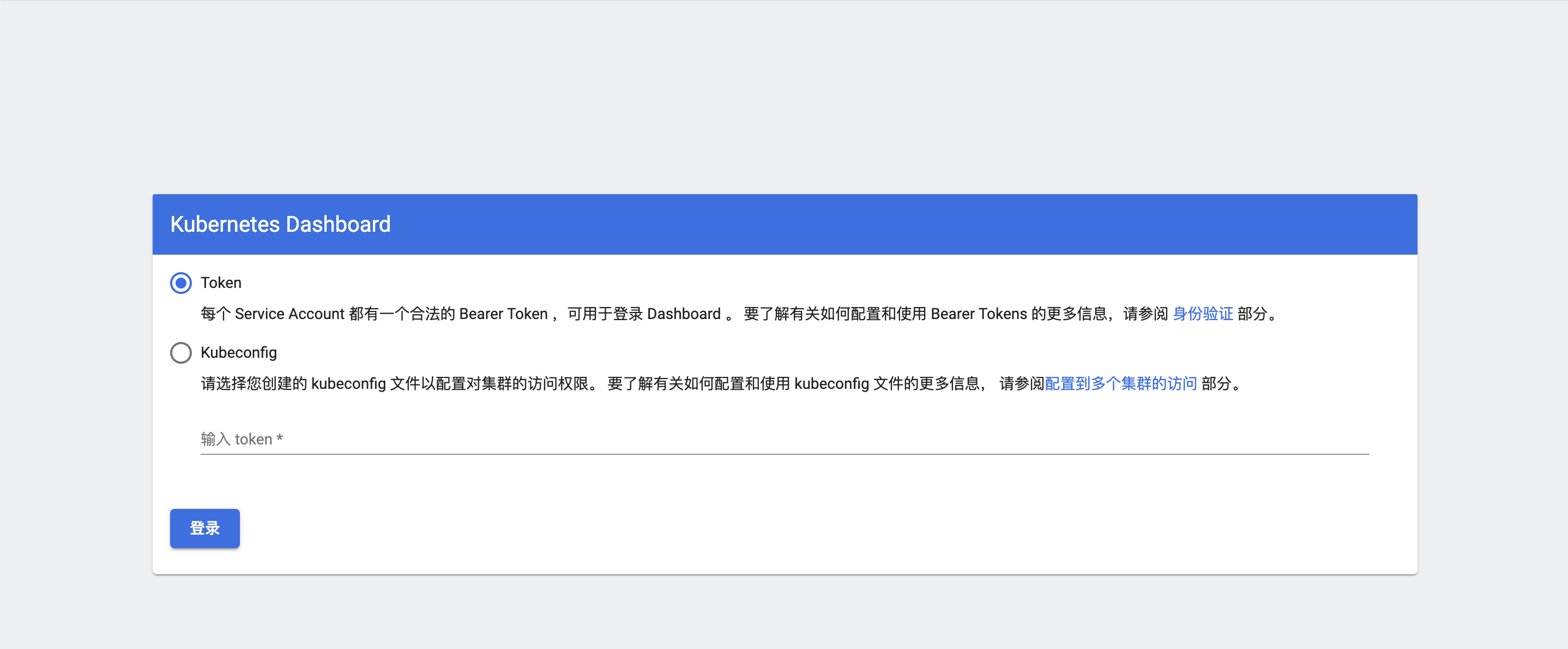

安装 dashabord

[root@controller-node-1 ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

检查状态

[root@controller-node-1 ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7bc864c59-lwlnf 0/1 ContainerCreating 0 10s

kubernetes-dashboard-6c7ccbcf87-55jln 0/1 ContainerCreating 0 10s

[root@controller-node-1 ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7bc864c59-lwlnf 1/1 Running 0 76s

kubernetes-dashboard-6c7ccbcf87-55jln 1/1 Running 0 76s

使用 nodeport/ingress 暴露出来

[root@controller-node-1 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.233.52.164 <none> 8000/TCP 2m52s

kubernetes-dashboard ClusterIP 10.233.18.137 <none> 443/TCP 2m52s

[root@controller-node-1 ~]# kubectl edit svc -n kubernetes-dashboard kubernetes-dashboard

apiVersion: v1

kind: Service

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}

creationTimestamp: "2023-12-12T07:58:58Z"

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

resourceVersion: "18237823"

uid: 4d121aa7-791e-4f31-98f9-0b33d8b91d09

spec:

clusterIP: 10.233.18.137

clusterIPs:

- 10.233.18.137

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- port: 443

protocol: TCP

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

sessionAffinity: None

type: NodePort

status:

loadBalancer: {}

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

annotations: ""

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{},"name":"kubernetes-dashboard-ingress","namespace":"kubernetes-dashboard"},"spec":{"ingressClassName":"nginx","rules":[{"host":"mawb.kubernetes.com","http":{"paths":[{"backend":{"service":{"name":"kubernetes-dashboard","port":{"number":443}}},"path":"/","pathType":"Prefix"}]}}]}}

nginx.ingress.kubernetes.io/backend-protocol: HTTPS

creationTimestamp: "2023-12-16T09:17:13Z"

generation: 2

name: kubernetes-dashboard-ingress

namespace: kubernetes-dashboard

resourceVersion: "21457"

uid: 78e57486-96d1-4496-a517-f8382bc53cbf

spec:

ingressClassName: nginx

rules:

- host: mawb.kubernetes.com

http:

paths:

- backend:

service:

name: kubernetes-dashboard

port:

number: 443

path: /

pathType: Prefix

status:

loadBalancer: {}

访问

https://10.29.17.83:32764/#/login

创建 admin 用户

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin

namespace: kubernetes-dashboard

---- 创建 token

[root@controller-node-1 ~]# kubectl -n kubernetes-dashboard create token admin

eyJhbGciOiJSUzI1NiIsImtpZCI6IndhQTNza3VaX0dtTmpOQU84TVNyaDRCaGlRNUVIbmVIXzNzVVc3VnVDaWsifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzAyMzcyMTQ5LCJpYXQiOjE3MDIzNjg1NDksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbiIsInVpZCI6ImIyNGMyZDc0LTkyMTMtNGMyZi04YmQyLWRiMWY3NzdjMzgzMSJ9fSwibmJmIjoxNzAyMzY4NTQ5LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4ifQ.onk5Pu6dieQUvi57mQ18_T942eUHZnE7viqUAklE_Ol3n8dv3uCoP6ieUal98NMEEtjfMinU4Kgn2d9iKnBB0wH8dwLSqawM114Y7GzhndX2XlCQKp55umb3v9wVyfYguqC3Q3F2nV23VjvLzuLoUXLeVU7jrkLEj1wPc7uRk1jMAoCqP5sSZBPFhcO2SduJBWZSEvkq3xJDK_nFYW37wNI3Zs3dglCzAN2RYC_cDjL6u6Mmzys3mFV9vCD41EkOkcAOcvXIkkVEFM52yDBG44LPSCZrYtJLFMwCEsmAisn8UZxBhDKOGebewyyh-cU1bCd8-QYaJWoY5GPQI1y9kQ

---- 创建带时间戳的 token

[root@controller-node-1 ~]# kubectl -n kubernetes-dashboard create token admin --duration 3600m

eyJhbGciOiJSUzI1NiIsImtpZCI6IndhQTNza3VaX0dtTmpOQU84TVNyaDRCaGlRNUVIbmVIXzNzVVc3VnVDaWsifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzAyNjQ2OTc5LCJpYXQiOjE3MDI0MzA5NzksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbiIsInVpZCI6ImIyNGMyZDc0LTkyMTMtNGMyZi04YmQyLWRiMWY3NzdjMzgzMSJ9fSwibmJmIjoxNzAyNDMwOTc5LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4ifQ.XzEqLRNDq2lHE1M_3wRhQTt0V1JgsoKE_iSFASyfDqEkuMa7V8l4YXKWoD-7TAevfObVHavGR19nTqQ8DPQ2jD3MfJGxpEZMkJ3A8HdQ1KLEwf-A_9kbh5gBviNdukhFspLtx7n9P1T9a3otztVwQTf6RqP-VAdBB-6iZfKXzf-zK7jUAbS7eAR--LJ1Wx5lukcUSguyHnZ0kHS1hRH6rUfxeaNLF3Tuk5W4clfPmufOnRT2ocWmfkvyEE5SSJQJd_odludGjx6Yu-ZB5t5OM1AsQDCNNQ7fNxYRdrDWRJCDkBg5UILMjSULCZ2k--VZXTmtxZAPm51j3y4pmt3Yqw

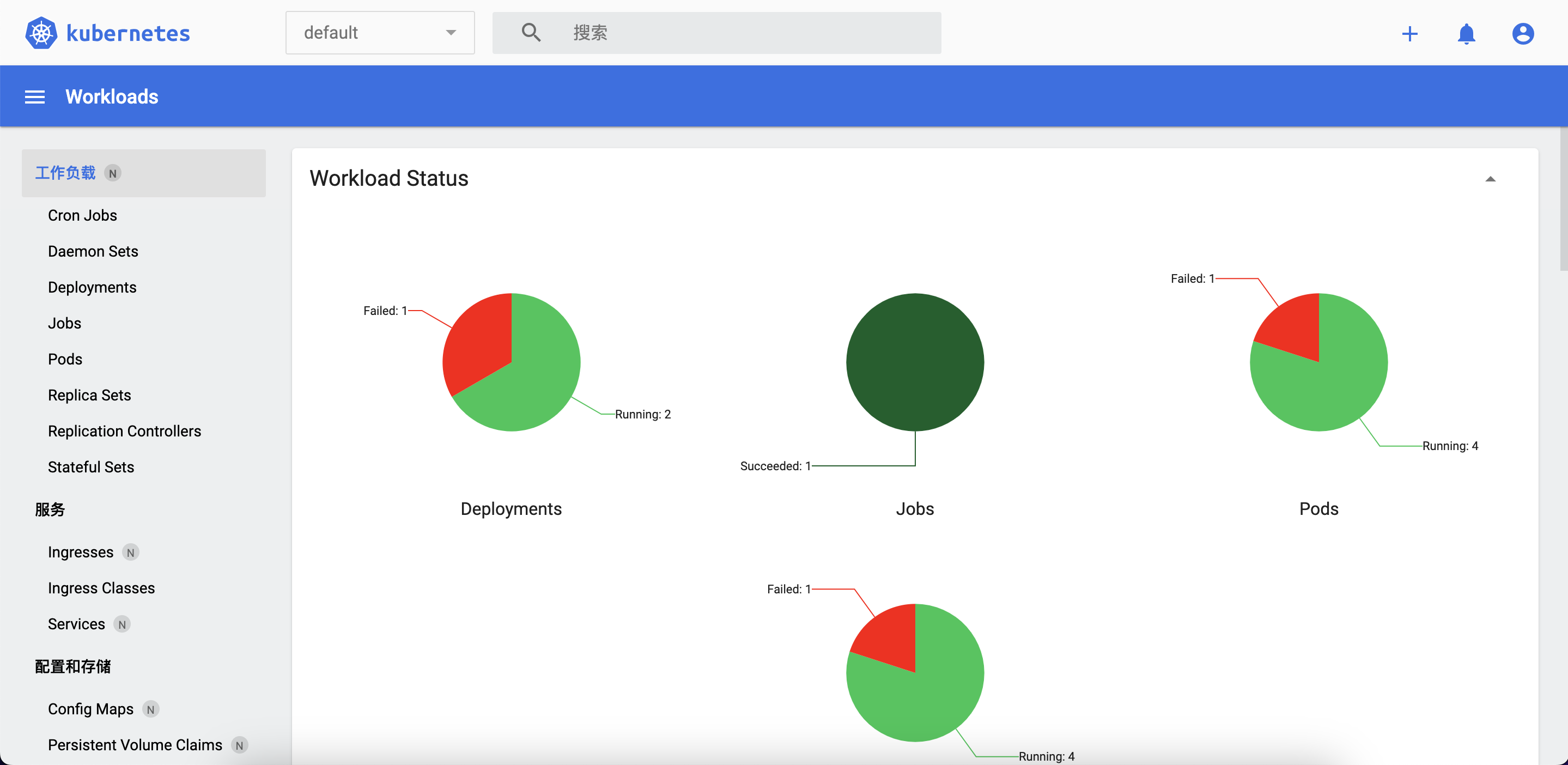

再次登录 UI

开源 UI 调研

- kubeapps:看起来是管理 helm-chart 的一个页面工具,来方便应用的部署和升级,调研下是否有中文界面,使用上需要用户学习 helm 打包,难度中级、页面风格有点类似 vmware 的登录首页

- octant:vmware 开源的 dashboard 页面,和原生提供的页面类似,界面简洁、资源配置丰富,重点调研下

安装神器

- kubean:daocloud 公司开源的一个集群生命周期管理工具

- kubeesz:github 社区挺活跃的

- kubespary:k8s 维护的一个工具,国内大多都是套壳这个

参考文件

CronJob、Job 资源示例

apiVersion: batch/v1

kind: CronJob

metadata:

name: hello

spec:

suspend: true # 挂起 cronjob,不会影响已经开始的任务

schedule: "* * * * *" # 每分钟执行一次

successfulJobsHistoryLimit: 2 # 保留历史成功数量

failedJobsHistoryLimit: 3 # 保留历史失败数量

jobTemplate: # kind: job

spec:

suspend: true # 挂起 job (true 时运行中 pod 被终止,恢复时 activeDeadlineSeconds 也会重新计时)

completions: 5 # 完成次数 (default: 1)

parallelism: 2 # 并行个数 (default: 1)

backoffLimit: 2 # 重试次数 (default : 1 重启时间按指数曾长<10、20、30>.最多6m)

activeDeadlineSeconds: 20 # job 最大的生命周期,时间到停止所有相关 pod (Job 状态更新为 type: Failed、reason: DeadlineExceeded)

template:

spec:

containers:

- name: hello

image: busybox:1.28

imagePullPolicy: IfNotPresent

command:

- /bin/sh

- -c

- date; echo Hello from the Kubernetes cluster

restartPolicy: OnFailure # 控制 pod 异常时的动作,重启、异常重启、从不重启

- 有关时区问题如果 cronjob 没有明确指定,那么就按照 kube-controller-manger 指定的时区

探针使用

探针交互

- 容器中配置探针后,kubelet 会按指定配置对 pod 进行健康检查

探针种类

- 存活探针:决定什么时候重启 容器。如:pod 运行正常,但容器内进程启动时需要的依赖条件异常<db、nfs> 导致启动时夯住。

- 就绪探针:决定是否将 pod 相关 service 的 endpoint 摘除。容器运行且进程启动正常才算就绪

- 启动探针:决定容器的启动机制,以及容器启动后进行存活探针/就绪探针的检查。如容器启动耗时较长

探针检查结果

- Success:通过检查

- Failure:未通过检查

- Unknown:探测失败,不会采取任何行动

探针编写层级

- Pod: .spec.contaiers.livenessProbe

- Pod: .spec.contaiers.readinessProbe

- Pod: .spec.contaiers.startupProbe

探针检查方法

-

exec:相当于 command / shell 进行检查,支持 initialDelaySeconds / periodSeconds

apiVersion: v1 kind: Pod metadata: labels: test: liveness name: liveness-exec spec: containers: - name: liveness image: registry.k8s.io/busybox args: - /bin/sh - -c - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600 livenessProbe: exec: command: - cat - /tmp/healthy initialDelaySeconds: 5 periodSeconds: 5 -

httpGet:相当于 http 请求,支持 initialDelaySeconds / periodSeconds / Headers / port: .ports.name

apiVersion: v1 kind: Pod metadata: labels: test: liveness name: liveness-http spec: containers: - name: liveness image: registry.k8s.io/liveness args: - /server livenessProbe: httpGet: path: /healthz port: 8080 httpHeaders: - name: Custom-Header value: Awesome initialDelaySeconds: 3 periodSeconds: 3 -

tcpSocket: 相当于 telnet 某个端口(kubelet 建立套接字链接),支持 initialDelaySeconds / periodSeconds / Headers / port: .spec.containers.ports.name

apiVersion: v1 kind: Pod metadata: name: goproxy labels: app: goproxy spec: containers: - name: goproxy image: registry.k8s.io/goproxy:0.1 ports: - containerPort: 8080 readinessProbe: tcpSocket: port: 8080 initialDelaySeconds: 5 periodSeconds: 10 livenessProbe: tcpSocket: port: 8080 initialDelaySeconds: 15 periodSeconds: 20 --- port 支持使用 ports.name ports: - name: liveness-port containerPort: 8080 hostPort: 8080 livenessProbe: httpGet: path: /healthz port: liveness-port -

grpc:首先应用要支持该方法,且 grpc 的话,pod 中必须要定义 .spec.containers.ports 字段

apiVersion: v1 kind: Pod metadata: name: etcd-with-grpc spec: containers: - name: etcd image: registry.k8s.io/etcd:3.5.1-0 command: [ "/usr/local/bin/etcd", "--data-dir", "/var/lib/etcd", "--listen-client-urls", "http://0.0.0.0:2379", "--advertise-client-urls", "http://127.0.0.1:2379", "--log-level", "debug"] ports: - containerPort: 2379 livenessProbe: grpc: port: 2379 initialDelaySeconds: 10 -

启动探针、存活探针使用

ports: - name: liveness-port containerPort: 8080 hostPort: 8080 livenessProbe: httpGet: path: /healthz port: liveness-port failureThreshold: 1 periodSeconds: 10 startupProbe: httpGet: path: /healthz port: liveness-port failureThreshold: 30 #次数 periodSeconds: 10 - startupProbe 检查通过时,才会执行所配置的存活探针和就绪探针。 - startupProbe 探测配置建议:failureThreshold * periodSeconds(案例是5m,后执行就绪检查)

探针配置

Pod 生命周期

ConfigMaps

ConfigMap 是什么?

- 非 🔐 数据以

key:value的形式保存 - Pods 可以将其用作环境变量、命令行参数或者存储卷中的配置文件

- 将你的环境配置信息和 容器镜像 进行解耦,便于应用配置的修改及多云场景下应用的部署

- 与 ConfigMap 所对应的就是 Secret (加密数据)

ConfigMap 的特性

- 名字必须是一个合法的 DNS 子域名

data或binaryData字段下面的键名称必须由字母数字字符或者-、_或.组成、键名不可有重叠- v1.19 开始,可以添加

immutable字段到 ConfigMap 定义中,来创建 不可变更的 ConfigMap - ConfigMap 需要跟引用它的资源在同一命名空间下

- ConfigMap 更新新,应用会自动更新,kubelet 会定期检索配置是否最新

- SubPath 卷挂载的容器将不会收到 ConfigMap 的更新,需要重启应用

如何使用 ConfigMap

-

创建一个 ConfigMap 资源或者使用现有的 ConfigMap,多个 Pod 可以引用同一个 ConfigMap 资源

-

修改 Pod 定义,在

spec.volumes[]下添加一个卷。 为该卷设置任意名称,之后将 -

为每个需要该 ConfigMap 的容器添加一个 volumeMount

-

设置

.spec.containers[].volumeMounts[].name定义卷挂载点的名称 -

设置

.spec.containers[].volumeMounts[].readOnly=true -

设置

.spec.containers[].volumeMounts[].mountPath定义一个未使用的目录

-

-

更改你的 Yaml 或者命令行,以便程序能够从该目录中查找文件。ConfigMap 中的每个

data键会变成mountPath下面的一个文件名

场景

基于文件创建 ConfigMap

使用 kubectl create configmap 基于单个文件或多个文件创建 ConfigMap

# 文件如下:

[root@master01 ~]# cat /etc/resolv.conf

nameserveer 1.1.1.1

# 创建 ConfigMap

[root@master01 ~]# kubectl create configmap dnsconfig --from-file=resolve.conf

[root@master01 ~]# kubectl get configmap dnsconfig -o yaml

apiVersion: v1

data:

resolve.conf: |

nameserveer 1.1.1.1

kind: ConfigMap

metadata:

name: dnsconfig

namespace: default

Deployment 使用所创建的 ConfigMap 资源 Configure a Pod to Use a ConfigMap

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: dao-2048-2-test

name: dao-2048-2-test-dao-2048

namespace: default

spec:

replicas: 1

selector:

matchLabels:

component: dao-2048-2-test-dao-2048

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

labels:

app: dao-2048-2-test

name: dao-2048-2-test-dao-2048

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- amd64

containers:

- image: x.x.x.x/dao-2048/dao-2048:latest

imagePullPolicy: Always

name: dao-2048-2-test-dao-2048

resources:

limits:

cpu: 100m

memory: "104857600"

requests:

cpu: 100m

memory: "104857600"

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /etc/resolv.conf

name: configmap-dns

subPath: resolv.conf

dnsConfig:

nameservers:

- 192.0.2.1

dnsPolicy: None

imagePullSecrets:

- name: dao-2048-2-test-dao-2048-10.29.140.12

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

volumes:

- configMap:

defaultMode: 420

items:

- key: resolve.conf

path: resolv.conf

name: dnsconfig

name: configmap-dns

配置说明

# volumeMounts

volumeMounts:

- mountPath: /etc/resolv.conf # 定义容器内挂载路径

name: configmap-dns # 定义卷挂载点名称,以便 volumes 使用该名称挂载 configmap 资源

subPath: resolv.conf # 指定所引用的卷内的子文件/子路径,而不是其根路径

# volumes

volumes:

- name: configmap-dns

configMap:

name: dnsconfig # 引用所创建的 configmap 资源 dnsconfig

defaultMode: 420

items: # 引用对应的 key,将其创建问文件

- key: resolve.conf # .data.resolve.conf

path: resolv.conf # 将 resolve.conf `key` 创建成 resolv.conf 文件

疑问 (为什么使用了 dnsConfig 的前提下,又将 resolv.conf 以 configmap 的形式注入容器中呢)

- 做测试,看 k8s 下以哪个配置生效,结果是 configmap 的形 会覆盖 yaml 定义的 dnsConfig 配置

- 在多云场景中,需要区分出应用配置的差异化,所以才考虑使用 configmap 的形式实现,在单一环境中推荐在 yaml 中直接定义 dnsCofnig

dnsPolicy: None

dnsConfig:

nameservers:

- 192.0.2.1

dnsPolicy: None

一次 kubelet PLEG is not healthy 报错事项

排查记录

-

现象:

-

节点 PLEG is not healthy 报错

Jul 03 19:59:01 xxx-worker-004 kubelet[1946644]: E0703 19:59:01.292918 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m36.462980645s ago; threshold is 3m0s Jul 03 19:59:06 xxx-worker-004 kubelet[1946644]: E0703 19:59:06.293545 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m41.463610227s ago; threshold is 3m0s Jul 03 19:59:07 xxx-worker-004 kubelet[1946644]: I0703 19:59:07.513240 1946644 setters.go:77] Using node IP: "xxxxxx" Jul 03 19:59:11 xxx-worker-004 kubelet[1946644]: E0703 19:59:11.294548 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m46.464602826s ago; threshold is 3m0s Jul 03 19:59:16 xxx-worker-004 kubelet[1946644]: E0703 19:59:16.294861 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m51.464916622s ago; threshold is 3m0s Jul 03 19:59:17 xxx-worker-004 kubelet[1946644]: I0703 19:59:17.585868 1946644 setters.go:77] Using node IP: "xxxxxx" -

节点短暂性 NotReady、且有获取 / kill container 状态失败的 log

--- container 状态失败的 log Jul 03 19:59:24 xxx-worker-004 kubelet[1946644]: E0703 19:59:24.854445 1946644 remote_runtime.go:295] ContainerStatus "bdf4dc0af526a317e248c994719eabb233a9db337d535351a277b1b324cf5fec" from runtime service failed: rpc error: code = DeadlineExceeded desc = context deadline exceeded Jul 03 19:59:24 xxx-worker-004 kubelet[1946644]: E0703 19:59:24.854492 1946644 kuberuntime_manager.go:969] getPodContainerStatuses for pod "dss-controller-pod-658c484975-zq9mh_dss(65f4d584-88df-4fb7-bf04-d2a20a4273e3)" failed: rpc error: code = DeadlineExceeded desc = context deadline exceeded Jul 03 19:59:25 xxx-worker-004 kubelet[1946644]: E0703 19:59:25.996630 1946644 kubelet_pods.go:1247] Failed killing the pod "dss-controller-pod-658c484975-zq9mh": failed to "KillContainer" for "dss-controller-pod" with KillContainerError: "rpc error: code = DeadlineExceeded desc = context deadline exceeded" --- PLEG is not healthy / Node became not ready 的 log Jul 03 20:02:24 xxx-worker-004 kubelet[1946644]: I0703 20:02:24.508392 1946644 kubelet.go:1948] SyncLoop (UPDATE, "api"): "dx-insight-stolon-keeper-1_dx-insight(895aded8-7556-4c00-aca5-6c6e7aacf7a2)" Jul 03 20:02:25 xxx-worker-004 kubelet[1946644]: E0703 20:02:25.989898 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.135142317s ago; threshold is 3m0s Jul 03 20:02:26 xxx-worker-004 kubelet[1946644]: E0703 20:02:26.090013 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.235263643s ago; threshold is 3m0s Jul 03 20:02:26 xxx-worker-004 kubelet[1946644]: E0703 20:02:26.290144 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.435377809s ago; threshold is 3m0s Jul 03 20:02:26 xxx-worker-004 kubelet[1946644]: E0703 20:02:26.690286 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.835524997s ago; threshold is 3m0s Jul 03 20:02:27 xxx-worker-004 kubelet[1946644]: E0703 20:02:27.490434 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m1.63566563s ago; threshold is 3m0s Jul 03 20:02:28 xxx-worker-004 kubelet[1946644]: I0703 20:02:28.903852 1946644 setters.go:77] Using node IP: "xxxxxx" Jul 03 20:02:28 xxx-worker-004 kubelet[1946644]: I0703 20:02:28.966272 1946644 kubelet_node_status.go:486] Recording NodeNotReady event message for node xxx-worker-004 Jul 03 20:02:28 xxx-worker-004 kubelet[1946644]: I0703 20:02:28.966300 1946644 setters.go:559] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2023-07-03 20:02:28.966255129 +0800 CST m=+7095087.092396567 LastTransitionTime:2023-07-03 20:02:28.966255129 +0800 CST m=+7095087.092396567 Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m3.111515826s ago; threshold is 3m0s} --- 每次 pleg 都有获取 container 状态失败的 log,也有在 pleg 之前的 log Jul 03 20:03:25 xxx-worker-004 kubelet[1946644]: E0703 20:03:25.881543 1946644 remote_runtime.go:295] ContainerStatus "bdf4dc0af526a317e248c994719eabb233a9db337d535351a277b1b324cf5fec" from runtime service failed: rpc error: code = DeadlineExceeded desc = context deadline exceeded Jul 03 20:03:25 xxx-worker-004 kubelet[1946644]: E0703 20:03:25.881593 1946644 kuberuntime_manager.go:969] getPodContainerStatuses for pod "dss-controller-pod-658c484975-zq9mh_dss(65f4d584-88df-4fb7-bf04-d2a20a4273e3)" failed: rpc error: code = DeadlineExceeded desc = context deadline exceeded Jul 03 20:06:26 xxx-worker-004 kubelet[1946644]: I0703 20:06:26.220050 1946644 kubelet.go:1948] SyncLoop (UPDATE, "api"): "dx-insight-stolon-keeper-1_dx-insight(895aded8-7556-4c00-aca5-6c6e7aacf7a2)" Jul 03 20:06:26 xxx-worker-004 kubelet[1946644]: E0703 20:06:26.989827 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.108053792s ago; threshold is 3m0s Jul 03 20:06:27 xxx-worker-004 kubelet[1946644]: E0703 20:06:27.089940 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.208169468s ago; threshold is 3m0s Jul 03 20:06:27 xxx-worker-004 kubelet[1946644]: E0703 20:06:27.290060 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.408291772s ago; threshold is 3m0s Jul 03 20:06:27 xxx-worker-004 kubelet[1946644]: E0703 20:06:27.690186 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m0.808415818s ago; threshold is 3m0s Jul 03 20:06:28 xxx-worker-004 kubelet[1946644]: E0703 20:06:28.490307 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m1.608530704s ago; threshold is 3m0s Jul 03 20:06:30 xxx-worker-004 kubelet[1946644]: E0703 20:06:30.090657 1946644 kubelet.go:1879] skipping pod synchronization - PLEG is not healthy: pleg was last seen active 3m3.208885327s ago; threshold is 3m0s Jul 03 20:06:30 xxx-worker-004 kubelet[1946644]: I0703 20:06:30.557003 1946644 setters.go:77] Using node IP: "xxxxxx" Jul 03 20:06:30 xxx-worker-004 kubelet[1946644]: I0703 20:06:30.616041 1946644 kubelet_node_status.go:486] Recording NodeNotReady event message for node xxx-worker-004 -

当前状态下,节点可以创建 、删除 pod (约 2-3 份钟一次 pleg 报错,持续 1-2 分钟左右)

-

dockerd 相关日志 (某个容器异常后,触发了 dockerd 的 stream copy error: reading from a closed fifo 错误,20分钟后开始打 Pleg 日志 )

Sep 11 15:24:35 [localhost] kubelet: I0911 15:24:35.062990 3630 setters.go:77] Using node IP: "172.21.0.9" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.535271938+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.535330354+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.536568097+08:00" level=error msg="Error running exec 18c5bcece71bee792912ff63a21b29507a597710736a131f03197fec1c44e8f7 in container: OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: \"ps\": executable file not found in $PATH: unknown" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.825485167+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.825494046+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:36 [localhost] dockerd: time="2023-09-11T15:24:36.826617470+08:00" level=error msg="Error running exec 6a68fb0d78ff1ec6c1c302a40f9aa80f0be692ba6971ae603316acc8f2245cf1 in container: OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: \"ps\": executable file not found in $PATH: unknown" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.323407978+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.323407830+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.324556918+08:00" level=error msg="Error running exec 824f974debe302cea5db269e915e3ba26e2e795df4281926909405ba8ef82f10 in container: OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: \"ps\": executable file not found in $PATH: unknown" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.636923557+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.636924060+08:00" level=error msg="stream copy error: reading from a closed fifo" Sep 11 15:24:38 [localhost] dockerd: time="2023-09-11T15:24:38.638120772+08:00" level=error msg="Error running exec 5bafe0da5f5240e00c2e6be99e859da7386276d49c6d907d1ac7df2286529c1e in container: OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: \"ps\": executable file not found in $PATH: unknown" Sep 11 15:24:39 [localhost] kubelet: W0911 15:24:39.181878 3630 kubelet_pods.go:880] Unable to retrieve pull secret n-npro-dev/harbor for n-npro-dev/product-price-frontend-846f84c5b9-cpd4q due to secret "harbor" not found. The image pull may not succeed.

-

-

排查方向

-

节点负载不高

-

cpu/memory 正常范围内

-

dockerd 文件句柄 1.9+

lsof -p $(cat /var/run/docker.pid) |wc -l

-

-

容器数量对比其他节点也没有很多

-

docker ps / info 正常输出

-

发现有残留的 container 和 pasue 容器,手动 docker rm -f 无法删除(发现 up 容器可以 inspect、残留的不行)

-

最后通过 kill -9 $pid 杀了进程,残留容器被清理

ps -elF | egrep "进程名/PID" #别杀错了呦,大兄弟!!!

-

-

后续再遇到可以看下 containerd 的日志

journalctl -f -u containerd docker stats # 看是有大量的残留容器

-

-

dockerd 开启 debug 模式 - 已搜集

-

kubelet 在 调整为 v4 重启后报错

Jul 03 20:10:48 xxx-worker-004 kubelet[465378]: I0703 20:10:48.289216 465378 status_manager.go:158] Starting to sync pod status with apiserver Jul 03 20:10:48 xxx-worker-004 kubelet[465378]: I0703 20:10:48.289245 465378 kubelet.go:1855] Starting kubelet main sync loop. Jul 03 20:10:48 xxx-worker-004 kubelet[465378]: E0703 20:10:48.289303 465378 kubelet.go:1879] skipping pod synchronization - [container runtime status check may not have completed yet, PLEG is not healthy: pleg has yet to be successful]-

查看是否由于 apiserver 限流导致的 worker 节点短暂性 NotReady

- 重启后拿的数据,没有参考意义,3*master 数据最大的 18

-

残留容器的清理过层

- 残留容器 [root@dce-worker-002 ~]# docker ps -a | grep 'dss-controller' cd99bc468028 3458637e441b "/usr/local/bin/moni…" 25 hours ago Up 25 hours k8s_dss-controller-pod_dss-controller-pod-66c79dd5ff-nvmg6_dss_e04c891f-1101-401f-bbdc-65f08c6261b5_0 ed56fd84a466 172.21.0.99/kube-system/dce-kube-pause:3.1 "/pause" 25 hours ago Up 25 hours k8s_POD_dss-controller-pod-66c79dd5ff-nvmg6_dss_e04c891f-1101-401f-bbdc-65f08c6261b5_0 1ea1fc956e08 172.21.0.99/dss-5/controller "/usr/local/bin/moni…" 2 months ago Up 2 months k8s_dss-controller-pod_dss-controller-pod-ddb8dd4-h8xff_dss_745deb5c-3aa0-4584-a627-908d9fd142fb_0 74ea748ea198 172.21.0.99/kube-system/dce-kube-pause:3.1 "/pause" 2 months ago Exited (0) 25 hours ago k8s_POD_dss-controller-pod-ddb8dd4-h8xff_dss_745deb5c-3aa0-4584-a627-908d9fd142fb_0 - 清理过程 (通过 up 的容器获取到进程名,然后 ps 检索出来) [root@dce-worker-002 ~]# ps -elF | grep "/usr/local/bin/monitor -c" 4 S root 1428645 1428619 0 80 0 - 636 poll_s 840 15 Jul10 ? 00:01:55 /usr/local/bin/monitor -c 4 S root 2939390 2939370 0 80 0 - 636 poll_s 752 5 Sep11 ? 00:00:01 /usr/local/bin/monitor -c 0 S root 3384975 3355866 0 80 0 - 28203 pipe_w 972 23 16:04 pts/0 00:00:00 grep --color=auto /usr/local/bin/monitor -c [root@dce-worker-002 ~]# ps -elF | egrep "1428619|1428645" 0 S root 1428619 1 0 80 0 - 178392 futex_ 12176 22 Jul10 ? 01:47:37 /usr/bin/containerd-shim-runc-v2 -namespace moby -id 1ea1fc956e08ceecb1b729b2553e47ae8cb6dd954e4096afb23d604f814d3fb9 -address /run/containerd/containerd.sock # 这个是所有容器的父进程 containerd,不要杀了 4 S root 1428645 1428619 0 80 0 - 636 poll_s 840 3 Jul10 ? 00:01:55 /usr/local/bin/monitor -c 0 S root 3299955 1428619 0 80 0 - 209818 futex_ 12444 30 Aug13 ? 00:00:01 runc --root /var/run/docker/runtime-runc/moby --log /run/containerd/io.containerd.runtime.v2.task/moby/1ea1fc956e08ceecb1b729b2553e47ae8cb6dd954e4096afb23d604f814d3fb9/log.json --log-format json exec --process /tmp/runc-process119549742 --detach --pid-file /run/containerd/io.containerd.runtime.v2.task/moby/1ea1fc956e08ceecb1b729b2553e47ae8cb6dd954e4096afb23d604f814d3fb9/b1f66414652628a5e3eea0b59d7ee09c0a2a76bcb6f491e3d911536b310c430a.pid 1ea1fc956e08ceecb1b729b2553e47ae8cb6dd954e4096afb23d604f814d3fb9 0 R root 3387029 3355866 0 80 0 - 28203 - 980 30 16:05 pts/0 00:00:00 grep -E --color=auto 1428619|1428645 0 S root 3984582 1428645 0 80 0 - 838801 futex_ 36652 10 Aug13 ? 00:11:32 /usr/local/bin/controller -j dss-svc-controller.dss -a 10.202.11.63 -b 10.202.11.63 [root@dce-worker-002 ~]# kill -9 1428645 [root@dce-worker-002 ~]# kill -9 3984582

-

-

结论

-

节点中有残留的容器且 dokcer cli 无法正常删除、及 kubelet 获取容器状态时有 rpc failed 报错

-

在 kubelet 调整为 v4 level 的 log 日志后,重启 kubelet 也报 dockerd 检查异常

-

通过 kubelet 的监控来看,整个响应时间是在正常范围内的,因此 k8s 层面应该没有问题

通过以上结论,怀疑是 kubelet 调用 docker 去获取容器状态信息时异常导致的节点短暂性 NotReady,重启节点后恢复状态恢复正常

-

-

后续措施

- 监控 apiserver 性能数据,看是否有限流和响应慢的现象

- 优化集群中应用,发现应用使用了不存在 secret 来 pull 镜像,导致 pull 失败错误 1d 有 5w+条,增加了 kubelet 与 apiserver 通信的开销

-

做的不足的地方

- 没有拿 dockerd 堆栈的信息

- apiserver kubelet 的监控

- 在重启后看了 apiserver 是否有限流现象 (虽然嫌疑不大,worker 节点重启后 3*master 都不高)

- kubelet 的 relist 函数监控没有查看

环境信息搜集步骤

-

在不重启 dockerd 的情况下搜集 debug 日志和 堆栈 信息

- 开启 dockerd debug 模式 [root@worker03 ~]# vi /etc/docker/daemon.json { "storage-driver": "overlay2", "log-opts": { "max-size": "100m", "max-file": "3" }, "storage-opts": [ "overlay2.size=10G" ], "insecure-registries": [ "0.0.0.0/0" ], "debug": true, # 新增 "log-driver": "json-file" } kill -SIGHUP $(pidof dockerd) journalctl -f -u docker > docker-devbug.info - 打印堆栈信息 kill -SIGUSR1 $(pidof dockerd) cat docker-devbug.info | grep goroutine # 检索堆栈日志文件在哪个路径下 cat docker-devbug.info | grep datastructure # 这个我的环境没有 -

查看 apiserver 是否有限流的现象

- 获取具有集群 admin 权限的 clusterrolebinding 配置、或者自行创建对应的 clusterrole、clusterrolebinding、serviceaccount kubectl get clusterrolebindings.rbac.authorization.k8s.io | grep admin - 查看 clusterrolebinding 所使用的 serviceaccount 和 secret kubectl get clusterrolebindings.rbac.authorization.k8s.io xxx-admin -o yaml kubectl get sa -n kube-system xxx-admin -o yaml kubectl get secrets -n kube-system xxx-admin-token-rgqxl -o yaml echo "$token" | base64 -d > xxx-admin.token - 查看 apiserver 所有的 api 接口 、也可获取 kubelet 等其他组件的堆栈信息 curl --cacert /etc/daocloud/xxx/cert/apiserver.crt -H "Authorization: Bearer $(cat /root/xxx-admin.token)" https://$ip:16443 -k - 通过 metrics 接口查看监控数据 curl --cacert /etc/daocloud/xxx/cert/apiserver.crt -H "Authorization: Bearer $(cat /root/xxx-admin.token)" https://$ip:16443/metrics -k > apiserver_metrics.info - 查看这三个指标来看 apiserver 是否触发了限流 cat apiserver_metrics.info | grep -E "apiserver_request_terminations_total|apiserver_dropped_requests_total|apiserver_current_inflight_requests" - 通过 kubelet metrics 查看当时更新状态时卡在什么位置 curl --cacert /etc/daocloud/xxx/cert/apiserver.crt -H "Authorization: Bearer $(cat /root/xxx-admin.token)" https://127.0.0.1:10250/debug/pprof/goroutine?debug=1 -k curl --cacert /etc/daocloud/xxx/cert/apiserver.crt -H "Authorization: Bearer $(cat /root/xxx-admin.token)" https://127.0.0.1:10250/debug/pprof/goroutine?debug=2 -k -

通过 prometheus 监控查看 kubelet 性能数据

- pleg 中 relist 函数负责遍历节点容器来更新 pod 状态(relist 周期 1s,relist 完成时间 + 1s = kubelet_pleg_relist_interval_microseconds) kubelet_pleg_relist_interval_microseconds kubelet_pleg_relist_interval_microseconds_count kubelet_pleg_relist_latency_microseconds kubelet_pleg_relist_latency_microseconds_count - kubelet 遍历节点中容器信息 kubelet_runtime_operations{operation_type="container_status"} 472 kubelet_runtime_operations{operation_type="create_container"} 93 kubelet_runtime_operations{operation_type="exec"} 1 kubelet_runtime_operations{operation_type="exec_sync"} 533 kubelet_runtime_operations{operation_type="image_status"} 579 kubelet_runtime_operations{operation_type="list_containers"} 10249 kubelet_runtime_operations{operation_type="list_images"} 782 kubelet_runtime_operations{operation_type="list_podsandbox"} 10154 kubelet_runtime_operations{operation_type="podsandbox_status"} 315 kubelet_runtime_operations{operation_type="pull_image"} 57 kubelet_runtime_operations{operation_type="remove_container"} 49 kubelet_runtime_operations{operation_type="run_podsandbox"} 28 kubelet_runtime_operations{operation_type="start_container"} 93 kubelet_runtime_operations{operation_type="status"} 1116 kubelet_runtime_operations{operation_type="stop_container"} 9 kubelet_runtime_operations{operation_type="stop_podsandbox"} 33 kubelet_runtime_operations{operation_type="version"} 564 - kubelet 遍历节点中容器的耗时 kubelet_runtime_operations_latency_microseconds{operation_type="container_status",quantile="0.5"} 12117 kubelet_runtime_operations_latency_microseconds{operation_type="container_status",quantile="0.9"} 26607 kubelet_runtime_operations_latency_microseconds{operation_type="container_status",quantile="0.99"} 27598 kubelet_runtime_operations_latency_microseconds_count{operation_type="container_status"} 486 kubelet_runtime_operations_latency_microseconds{operation_type="list_containers",quantile="0.5"} 29972 kubelet_runtime_operations_latency_microseconds{operation_type="list_containers",quantile="0.9"} 47907 kubelet_runtime_operations_latency_microseconds{operation_type="list_containers",quantile="0.99"} 80982 kubelet_runtime_operations_latency_microseconds_count{operation_type="list_containers"} 10812 kubelet_runtime_operations_latency_microseconds{operation_type="list_podsandbox",quantile="0.5"} 18053 kubelet_runtime_operations_latency_microseconds{operation_type="list_podsandbox",quantile="0.9"} 28116 kubelet_runtime_operations_latency_microseconds{operation_type="list_podsandbox",quantile="0.99"} 68748 kubelet_runtime_operations_latency_microseconds_count{operation_type="list_podsandbox"} 10712 kubelet_runtime_operations_latency_microseconds{operation_type="podsandbox_status",quantile="0.5"} 4918 kubelet_runtime_operations_latency_microseconds{operation_type="podsandbox_status",quantile="0.9"} 15671 kubelet_runtime_operations_latency_microseconds{operation_type="podsandbox_status",quantile="0.99"} 18398 kubelet_runtime_operations_latency_microseconds_count{operation_type="podsandbox_status"} 323 -

如何通过 prometheus 监控 kube_apiserver

- 待补充 -

快速搜集节点当前性能数据信息

mkdir -p /tmp/pleg-log cd /tmp/pleg-log journalctl -f -u docker > dockerd.log journalctl -f -u containerd > dockerd.log ps -elF > ps.log top -n 1 > top.log pstree > pstree.log netstat -anltp > netsat.log sar -u > sar.cpu.log iostat > iostat.log iotop -n 2 > iotop.log top -n 1 >> top.log df -h > df.log timeout 5 docker ps -a > docker.ps.log timeout 5 docker stats --no-stream > docker.ps.log free -lm > free.log service kubelet status > kubelet.status service docker status > docker.status

podman containers 数据软链

停止 podman 容器

# 暂停容器

podman ps -q | xargs podman pause

5f375c9d1750

# 检查容器状态

podman ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f375c9d1750 docker.m.daocloud.io/kindest/node:v1.26.2 2 weeks ago Paused 0.0.0.0:443->30443/tcp, 0.0.0.0:8081->30081/tcp, 0.0.0.0:9000-9001->32000-32001/tcp, 0.0.0.0:16443->6443/tcp my-cluster-installer-control-plane

备份数据

# cp 数据到 /home/kind/podman-containers/ 目录下

mkdir -p /home/kind/podman-containers

cp -rf /var/lib/containers/* /home/kind/podman-containers/

# 备份数据

cd /var/lib/

tar -zcvf /home/kind/podman-containers/containers.tgz containers

清理数据

# 检查是否有 container 的 tmp 目录在挂载

df -hT | grep containers

shm tmpfs 63M 0 63M 0% /var/lib/containers/storage/overlay-containers/5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72/userdata/shm

fuse-overlayfs fuse.fuse-overlayfs 546G 220G 326G 41% /var/lib/containers/storage/overlay/0cec29426340872d7368db0571a3fc26731040f6e7a9ccd92e2442441f44de84/merged

# 有则 umount 掉,否则 rm 时会失败

umount /var/lib/containers/storage/overlay-containers/5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72/userdata/shm

umount /var/lib/containers/storage/overlay/0cec29426340872d7368db0571a3fc26731040f6e7a9ccd92e2442441f44de84/merged

# 删除数据

cd /var/lib/

rm -rf containers/*

rm: 无法删除"containers/storage/overlay": 设备或资源忙, # 貌似要多删除几次

配置软链并恢复容器

# 软链

ln -vs /home/kind/podman-containers/storage /var/lib/containers/storage

ln -vs /home/kind/podman-containers/docker /var/lib/containers/docker

ln -vs /home/kind/podman-containers/cache /var/lib/containers/cache

# 如何删除 软链

unlink /var/lib/containers/cache

# 查看结果

ls -l

总用量 0

lrwxrwxrwx. 1 root root 11 1月 24 15:38 cache -> /home/kind/podman-containers/cache

lrwxrwxrwx. 1 root root 12 1月 24 15:38 docker -> /home/kind/podman-containers/docker

lrwxrwxrwx. 1 root root 13 1月 24 15:41 storage -> /home/kind/podman-containers/storage

# 启动容器

podman unpause 5f375c9d1750

5f375c9d1750

# 检查容器状态

podman ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f375c9d1750 docker.m.daocloud.io/kindest/node:v1.26.2 2 weeks ago Up 2 weeks 0.0.0.0:443->30443/tcp, 0.0.0.0:8081->30081/tcp, 0.0.0.0:9000-9001->32000-32001/tcp, 0.0.0.0:16443->6443/tcp my-cluster-installer-control-plane

最后检查

# exec 进容器异常,需要手动重启下容器

podman exec -it 5f37 /bin/bash

Error: runc: exec failed: unable to start container process: exec: "/bin/bash": stat /bin/bash: no such file or directory: OCI runtime attempted to invoke a command that was not found

# 重启容器

podman restart 5f37

WARN[0010] StopSignal (37) failed to stop container my-cluster-installer-control-plane in 10 seconds, resorting to SIGKILL

ERRO[0013] Cleaning up container 5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72: unmounting container 5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72 storage: cleaning up container 5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72 storage: unmounting container 5f375c9d1750c5e5705467541ac92c23f6a338998cd1d2881dcdab1485a0be72 root filesystem: removing mount point "/var/lib/containers/storage/overlay/0cec29426340872d7368db0571a3fc26731040f6e7a9ccd92e2442441f44de84/merged": directory not empty

Error: OCI runtime error: runc: runc create failed: invalid rootfs: not an absolute path, or a symlink

# 重启后检查,容器运行正常

podman ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f375c9d1750 docker.m.daocloud.io/kindest/node:v1.26.2 2 weeks ago Up 22 seconds 0.0.0.0:443->30443/tcp, 0.0.0.0:8081->30081/tcp, 0.0.0.0:9000-9001->32000-32001/tcp, 0.0.0.0:16443->6443/tcp my-cluster-installer-control-plane

# 再次进容器,检查点火各组件pod 状态,均恢复正常

podman exec -it 5f37 /bin/bash

root@my-cluster-installer-control-plane:/# kubectl get node

NAME STATUS ROLES AGE VERSION

my-cluster-installer-control-plane Ready control-plane 17d v1.26.2

root@my-cluster-installer-control-plane:/# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-787d4945fb-fs7b6 1/1 Running 1 (31s ago) 17d

kube-system coredns-787d4945fb-hscmx 1/1 Running 1 (31s ago) 17d

kube-system etcd-my-cluster-installer-control-plane 1/1 Running 0 20s

kube-system kindnet-hmhfb 1/1 Running 2 (31s ago) 17d

kube-system kube-apiserver-my-cluster-installer-control-plane 1/1 Running 0 20s

kube-system kube-controller-manager-my-cluster-installer-control-plane 1/1 Running 1 (31s ago) 17d

kube-system kube-proxy-2lz72 1/1 Running 1 (31s ago) 16d

kube-system kube-scheduler-my-cluster-installer-control-plane 1/1 Running 1 (31s ago) 17d

kubean-system kubean-admission-5d4768f9cf-7tqqq 1/1 Running 1 (31s ago) 17d

kubean-system kubean-c6b8c997-zchfh 1/1 Running 1 (31s ago) 17d

kubean-system kubean-my-cluster-ops-job-w68tm 0/1 Completed 0 17d

local-path-storage local-path-provisioner-84f55fc489-m9hbf 1/1 Running 1 (31s ago) 17d

minio-system minio-5888c499dd-vxrpk 1/1 Running 1 (31s ago) 17d

museum-system chartmuseum-5db46b89f4-dv7tj 1/1 Running 1 (31s ago) 17d

registry-system registry-docker-registry-55666698c5-jkn7p 1/1 Running 1 (31s ago) 17d

执行 push/pull 验证

push 镜像

[root@g-master-all ~]# podman tag docker.m.daocloud.io/nginx:latest 10.29.14.27/docker.m.daocloud.io/nginx:latest

[root@g-master-all ~]# podman push 10.29.14.27/docker.m.daocloud.io/nginx:latest

Getting image source signatures

Copying blob 943132143199 done |

Copying blob 88ebb510d2fb done |

Copying blob 58045dd06e5b done |

Copying blob 32c977818204 done |

Copying blob f5fe472da253 done |

Copying blob 541cf9cf006d done |

Copying blob b57b5eac2941 done |

Copying config 9bea9f2796 done |

Writing manifest to image destination

[root@g-master-all ~]# podman push 10.29.14.27/docker.m.daocloud.io/nginx:latest

# 换节点 pull 镜像

[root@g-m-10-29-14-196 containers]# podman pull 10.29.14.27/docker.m.daocloud.io/nginx:latest --tls-verify=false

Trying to pull 10.29.14.27/docker.m.daocloud.io/nginx:latest...

Getting image source signatures

Copying blob b24790593a5b done |

Copying blob 0569eaf32db2 done |

Copying blob 0aaac86b6acc done |

Copying blob ab698a6d27cf done |

Copying blob ba27271778c3 done |

Copying blob 3bc4473eb8b1 done |

Copying blob 96cab5c185c1 done |

Copying config 9bea9f2796 done |

Writing manifest to image destination

9bea9f2796e236cb18c2b3ad561ff29f655d1001f9ec7247a0bc5e08d25652a1

kubelet 资源预留及限制

systemReserved

system-reserved用于为诸如sshd、udev等系统守护进程记述其资源预留值system-reserved也应该为kernel预留内存,因为目前kernel使用的内存并不记在 Kubernetes 的 Pod 上,也推荐为用户登录会话预留资源(systemd 体系中的user.slice)

kubeReserved

kube-reserved用来给诸如kubelet、containerd、节点问题监测器等 Kubernetes 系统守护进程记述其资源预留值

podPidsLimit

podPidsLimit用来给每个 Pod 中可使用的 PID 个数上限

kubelet-config.yaml

[root@worker-node-1 ~]# cat /etc/kubernetes/kubelet-config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

nodeStatusUpdateFrequency: "10s"

failSwapOn: True

authentication:

anonymous:

enabled: false

webhook:

enabled: True

x509:

clientCAFile: /etc/kubernetes/ssl/ca.crt

systemReserved: # 给系统组件预留、生产计划 4c、8g

cpu: 1000m

memory: 2048Mi

kubeReserved: # 给 kube 组件预留、生产计划 12c、24g

cpu: 1000m

memory: 2048Mi

authorization:

mode: Webhook

staticPodPath: /etc/kubernetes/manifests

cgroupDriver: systemd

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

maxPods: 115

podPidsLimit: 12000 # 灵雀默认值 10000、为了保证组件及业务的稳定将其 +2000

address: 10.29.26.200

readOnlyPort: 0

healthzPort: 10248

healthzBindAddress: 127.0.0.1

kubeletCgroups: /system.slice/kubelet.service

clusterDomain: cluster.local

protectKernelDefaults: true

rotateCertificates: true

clusterDNS:

- 10.233.0.3

resolvConf: "/etc/resolv.conf"

eventRecordQPS: 5

shutdownGracePeriod: 60s

shutdownGracePeriodCriticalPods: 20s

# 默认值

Capacity:

cpu: 14

ephemeral-storage: 151094724Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 28632864Ki

pods: 115

Allocatable:

cpu: 14

ephemeral-storage: 139248897408

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 28530464Ki

pods: 115

# 更改后

Capacity:

cpu: 14

ephemeral-storage: 151094724Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 28632864Ki

pods: 115

Allocatable:

cpu: 12

ephemeral-storage: 139248897408

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 24336160Ki

pods: 115

LimitRange

pod 资源限额

Pod 最多能够使用命名空间的资源配额所定义的 CPU 和内存用量

namespace - resourceQuotas资源配额

[root@g-master1 ~]# kubectl get resourcequotas -n mawb -o yaml quota-mawb

apiVersion: v1

kind: ResourceQuota

metadata:

creationTimestamp: "2023-11-27T05:16:16Z"

name: quota-mawb

namespace: mawb

resourceVersion: "14278163"

uid: 60ae6d65-5ce6-4f8f-bee2-ec0db19a9785

spec:

hard: # 限制改 ns 下可以使用的资源、cpu、memory 哪个先到就先限制哪个资源

limits.cpu: "1"

limits.memory: "1073741824"

requests.cpu: "1"

requests.memory: "1073741824"

status:

hard:

limits.cpu: "1"

limits.memory: "1073741824"

requests.cpu: "1"

requests.memory: "1073741824"

used: # 当前 ns 下已经使用的资源、这里看 memory 已经到配额,再起新的 pod,会导致启动失败

limits.cpu: 500m

limits.memory: 1Gi

requests.cpu: 500m

requests.memory: 1Gi

pod - rangeLimit 资源限额

[root@g-master1 ~]# kubectl get limitranges -n mawb -o yaml limits-mawb

apiVersion: v1

kind: LimitRange

metadata:

creationTimestamp: "2023-11-27T05:16:16Z"

name: limits-mawb

namespace: mawb

resourceVersion: "14265593"

uid: 233b6dc7-e12a-4f2d-88f2-7c923a5cac7b

spec:

limits:

- default: # 定制默认限制

cpu: "1"

memory: "1073741824"

defaultRequest: # 定义默认请求

cpu: 500m

memory: 524288k

type: Container

示例应用

# deployment 副本数

[root@g-master1 ~]# kubectl get deployments.apps -n mawb

NAME READY UP-TO-DATE AVAILABLE AGE

resources-pod 2/4 0 2 33m

resources:

limits:

cpu: 250m

memory: 512Mi

requests:

cpu: 250m

memory: 512Mi

# pod 列表数

[root@g-master1 ~]# kubectl get pod -n mawb

NAME READY STATUS RESTARTS AGE

resources-pod-6b678fdc9f-957ft 1/1 Running 0 29m

resources-pod-6b678fdc9f-vngq7 1/1 Running 0 29m

# 新的 pod 启动报错、提示新的副本没有内存可用

kubectl describe rs -n mawb resources-pod-5b67cf49cf

Warning FailedCreate 15m replicaset-controller Error creating: pods "resources-pod-5b67cf49cf-6k6r5" is forbidden: exceeded quota: quota-mawb

requested: limits.cpu=1,limits.memory=1073741824,requests.memory=524288k # 需要在请求 512m 内存

used: limits.cpu=500m,limits.memory=1Gi,requests.memory=1Gi # 已用 cpu 500m、memory 1g

limited: limits.cpu=1,limits.memory=1073741824,requests.memory=1073741824 # 限制 cpu 1c、memory 1g

用户可通过 RBAC 进行权限控制,让不同 os 用户管理各自 namespace 下的资源

生成 configfile

#!/bin/bash

# 获取用户输入

read -p "请输入要创建的Namespace名称: " namespace

read -p "请输入要创建的ServiceAccount名称: " sa_name

read -p "请输入要创建的SecretName名称: " secret_name

read -p "请输入要生成的Config文件名称: " config_file_name

read -p "请输入Kubernetes集群API服务器地址: " api_server

# 创建Namespace

kubectl create namespace $namespace

# 创建ServiceAccount

kubectl create serviceaccount $sa_name -n $namespace

# 创建Secret

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: Secret

type: kubernetes.io/service-account-token

metadata:

name: $secret_name

namespace: $namespace

annotations:

kubernetes.io/service-account.name: $sa_name

EOF

# 创建Role

cat <<EOF | kubectl apply -f -

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: $namespace

name: $sa_name-role

rules:

- apiGroups: [""]

resources: ["pods", "services", "configmaps"] # 根据需要修改资源类型

verbs: ["get", "list", "watch", "create", "update", "delete"]

EOF

# 创建RoleBinding

cat <<EOF | kubectl apply -f -

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: $sa_name-binding

namespace: $namespace

subjects:

- kind: ServiceAccount

name: $sa_name

namespace: $namespace

roleRef:

kind: Role

name: $sa_name-role

apiGroup: rbac.authorization.k8s.io

EOF

# 获取ServiceAccount的Token

#secret_name=$(kubectl get serviceaccount $sa_name -n $namespace -o jsonpath='{.secrets[0].name}')

token=$(kubectl get secret $secret_name -n $namespace -o jsonpath='{.data.token}' | base64 --decode)

# 生成Config文件

cat <<EOF > $config_file_name

apiVersion: v1

kind: Config

clusters:

- name: $namespace-cluster

cluster:

server: "https://$api_server:6443"

certificate-authority-data: "$(kubectl config view --raw -o jsonpath='{.clusters[0].cluster.certificate-authority-data}')"

contexts:

- name: $namespace-context

context:

cluster: $namespace-cluster

namespace: $namespace

user: $sa_name

current-context: $namespace-context

users:

- name: $sa_name

user:

token: $token

EOF

echo "RBAC和Config文件创建成功!"

ssh 192.168.1.110 "mkdir -p /home/$namespace/.kube/"

scp $config_file_name root@192.168.1.110:/home/$namespace/.kube/

ssh 192.168.1.110 "chmod 755 /home/$namespace/.kube/$config_file_name && cp /home/$namespace/.kube/$config_file_name /home/$config_file_name/.kube/config"

echo "Configfile远程copy完成,请用普通用户登录centos执行: alias k='kubectl --kubeconfig=.kube/config' 或将其追加到 .bashrc 并 source .bashrc"

使用

root@g-m-10-8-162-12 rbac]# bash gen_rbac.sh

请输入要创建的Namespace名称: mawb

请输入要创建的ServiceAccount名称: mawb

请输入要创建的SecretName名称: mawb

请输入要生成的Config文件名称: mawb

请输入Kubernetes集群API服务器地址: 192.168.1.110

Error from server (AlreadyExists): namespaces "mawb" already exists

error: failed to create serviceaccount: serviceaccounts "mawb" already exists

secret/mawb unchanged

role.rbac.authorization.k8s.io/mawb-role unchanged

rolebinding.rbac.authorization.k8s.io/mawb-binding unchanged

RBAC和Config文件创建成功!

mawb 100% 2629 7.9MB/s 00:00

Configfile远程copy完成,请用普通用户登录centos执行: alias k='kubectl --kubeconfig=.kube/config' 或将其追加到 .bashrc 并 source .bashrc

rbac 和 kubeconfig 汇总篇

配置 rbac

-

定义 clusterrole & clusterrolebinding